Neural networks are often overconfident, which leads to losses when trading; simple models can underfit, but layered approaches make up the difference

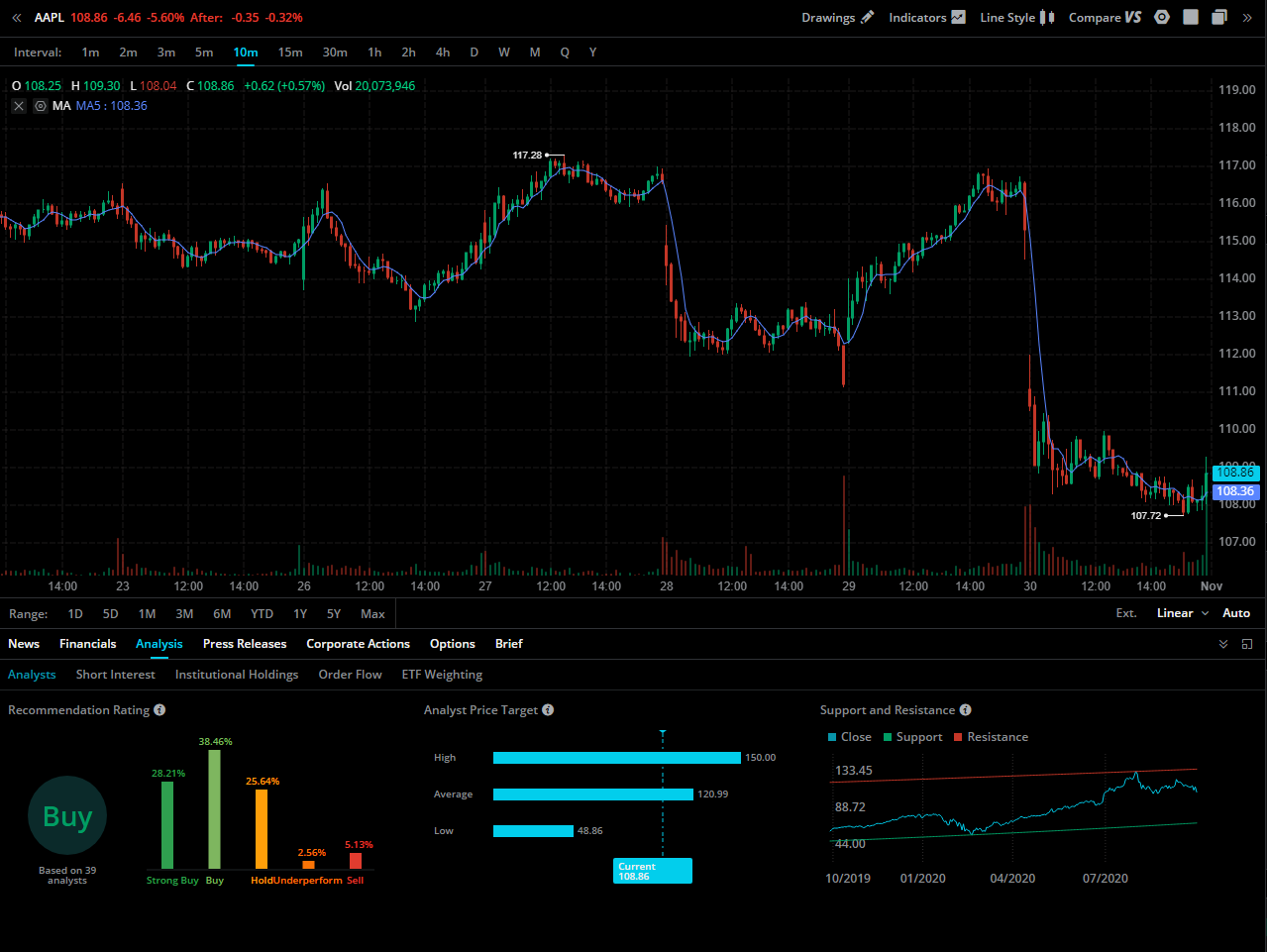

Trading in an efficient, complex market isn’t a cats versus dogs problem. Markets have short data series, are noisy, are random at times, and aren’t controlled under tight bounds. Price action isn’t even well understood by humans.

When 90% of human traders fail to make money in the stock market, how is one model, even if it’s a neural network, supposed to make money, and not overfit, when markets are poorly understood, non-deterministic, noisy, and have short data series?

Popularized applications of neural networks have similar stories: known rules (like chess or speech recognition), deterministic behavior (operated machines), tasks done well by humans (image classification), and lots of relevant data (machine translation).

Simple, layered approaches are ideal for generating good trade signals. In a recent book titled Advances in Financial Machine Learning, Marcos Lopez de Prado, 2019 quant of the year, mentioned layering approaches when he discussed meta labeling.

Meta labeling is a way to layer size decisions (1K lots, 50K BBLs) on top of direction decisions (long or short). For instance, first build a primary model to predict a positive class well, then build a second (meta) model to differentiate regimes when the primary model isn’t correct. Use the direction from the primary model to place your bet; use the confidence of the meta model to size your bet.
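
The two layers can be sketched in a few lines. This is only an illustration under stated assumptions: a bet counts as "correct" when it made money, and the helper names (`make_meta_labels`, `layered_position`), the sample history, and the 0.7 confidence are all hypothetical.

```python
def make_meta_labels(primary_sides, realized_returns):
    """Label each primary bet 1 if it made money, else 0.

    primary_sides: +1 (long) or -1 (short) from the primary model.
    realized_returns: market return over each bet's holding period.
    """
    return [1 if side * ret > 0 else 0
            for side, ret in zip(primary_sides, realized_returns)]


def layered_position(primary_side, meta_confidence, max_position):
    """Direction from the primary model, size from the meta model."""
    return primary_side * meta_confidence * max_position


# Hypothetical backtest history: primary calls and what the market then did.
primary_sides = [1, 1, -1, -1, 1]
realized_returns = [0.02, -0.01, -0.03, 0.01, 0.00]
meta_labels = make_meta_labels(primary_sides, realized_returns)
print(meta_labels)  # [1, 0, 1, 0, 0]

# A meta model (any classifier that outputs probabilities) would be fit on
# features plus these labels; its confidence is simply assumed to be 0.7 here.
position = layered_position(primary_side=-1, meta_confidence=0.7,
                            max_position=50_000)
print(position)
```

The meta model never changes the direction of the bet; it only decides how much of the maximum position to commit.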

To be completely transparent, it’s not clear to me whether the same models and indicators used to generate signals should also be used when exiting a position. I’m still on a quest to learn this. If you have thoughts on this point, then I would love to see your comment below.

How to size a bet with probabilities: Simply multiply a probability by your desired max position. Then map that size to a step function, to reduce small adjustments to a prior bet.
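
A minimal sketch of that sizing rule, assuming the step function rounds down to the nearest multiple of a step size (the choice of rounding is an assumption, as are the names `size_bet`, `max_position`, and `step`):

```python
import math


def size_bet(probability, max_position, step):
    """Scale max_position by probability, then snap down to a multiple of step."""
    raw_size = probability * max_position
    return math.floor(raw_size / step) * step


# Hypothetical numbers: a 100-lot max position, adjusted in 10-lot steps.
for p in (0.62, 0.66, 0.71):
    print(p, size_bet(p, max_position=100, step=10))
```

Here 0.62 and 0.66 land on the same 60-lot size, so a small change in probability does not trigger an adjustment, while 0.71 moves the bet up to 70 lots.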

Neural networks are a type of algorithm. They fall under the camp of machine learning. They were first introduced in the 1940s and have gone in and out of popularity from the 1960s until today.

Neural networks are versatile, scalable, and powerful, which makes them ideal for large, complex (even non-linear) machine learning tasks: computer vision, phonetic recognition, voice search, conversational speech recognition, speech and image feature coding, semantic utterance classification, handwriting recognition, audio processing, visual object recognition, information retrieval, the analysis of molecules that may lead to discovering new drugs, classifying billions of images, recommending the best videos to watch to hundreds of millions of users every day, learning to beat the world champion at the game of chess or Go, and even powering common machine learning tasks when there’s lots of data.

I pulled the above paragraph from a PowerPoint slide deck I made in graduate school. Does any of that sound like finance and trading? “Large, complex,” and “lots of data” aren’t phrases I hear often.

Some of my favorite models are the shallow and simple ones: Naïve Bayes, logistic regression, decision trees, and support vector machines. You can use them as binary classifiers. Binary classifiers, at times, can be more useful than continuous regression predictors.

Binary predictions are favorable because they emulate trade decisions: long or short. You can use the probabilities these models produce to help you size your bet, which is also favorable.

Naïve Bayes

Based on Bayes’ theorem, Naïve Bayes uses prior probabilities based on historical data to make probable predictions on future data. You can use these probabilities to make predictions by setting a threshold, say 50%. Any predicted probability below 50% becomes a short bet; any predicted probability above 50% becomes a long bet.

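    from sklearn.naive_bayes import GaussianNB

    # Fit on backtest data, weighting observations by w_backtest
    clf = GaussianNB().fit(X_backtest, y_backtest, sample_weight=w_backtest)
    predictions = clf.predict(X_live)  # predicted class: long or short
    probabilities = clf.predict_proba(X_live)[:, 1]  # probability of the second class in clf.classes_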

Logistic Regression

You can think of logistic regression as a linear equation that scales predictions between binary classes through a non-linear function. In the end, what you get is a prediction, long or short, and a probability to help you size.

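    from sklearn.linear_model import LogisticRegression

    # Fit on backtest data, weighting observations by w_backtest
    clf = LogisticRegression().fit(X_backtest, y_backtest, sample_weight=w_backtest)
    predictions = clf.predict(X_live)  # predicted class: long or short
    probabilities = clf.predict_proba(X_live)[:, 1]  # probability of the second class in clf.classes_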
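
To make the "linear equation through a non-linear function" idea concrete, here is a hand-rolled sketch of what a fitted logistic regression computes for one observation. The weights, bias, and feature values are made up for illustration, and `logistic_probability` is a hypothetical helper, not part of scikit-learn.

```python
import math


def logistic_probability(features, weights, bias):
    """Linear score squashed through the sigmoid into a probability in (0, 1)."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))


# Hypothetical fitted coefficients and one live observation.
weights = [0.8, -0.5]
bias = 0.1
prob_long = logistic_probability([1.2, 0.4], weights, bias)

# The 50% threshold turns the probability into a trade direction.
side = "long" if prob_long > 0.5 else "short"
print(side, round(prob_long, 3))
```

A score of zero maps to exactly 50%, which is why the 50% threshold corresponds to the linear decision boundary.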

Decision Tree

Decision trees use important market drivers to optimize trade decisions based on driver levels. They optimize trade decisions by finding favorable skew (a.k.a. probabilities). In a binary case, you get long and short predictions based on regimes (or decision paths) and a predicted probability.

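    from sklearn.tree import DecisionTreeClassifier as DTC

    # Fit on backtest data, weighting observations by w_backtest
    clf = DTC().fit(X_backtest, y_backtest, sample_weight=w_backtest)
    predictions = clf.predict(X_live)  # predicted class: long or short
    probabilities = clf.predict_proba(X_live)[:, 1]  # probability of the second class in clf.classes_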

Support Vector Machine

Similar to linear regression, a support vector machine is, in its basic form, a linear algorithm, but contrary to linear regression, it seeks to separate long and short historical observations with as wide a gap as possible. This is useful because we want to train algorithms to differentiate long and short bets. This algorithm can also produce a predicted probability (in scikit-learn, by setting probability=True), whereas linear regression doesn’t.

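    from sklearn.svm import SVC

    # probability=True is required for predict_proba to be available
    clf = SVC(probability=True).fit(X_backtest, y_backtest, sample_weight=w_backtest)
    predictions = clf.predict(X_live)  # predicted class: long or short
    probabilities = clf.predict_proba(X_live)[:, 1]  # probability of the second class in clf.classes_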

The goal of a layered approach is to reduce the strength of a poor prediction and to amplify the strength of a good prediction by ensembling simple, diverse models. Each simple, diverse model has strengths and weaknesses. By combining them, we hope to size up a position when all models are in alignment, thus increasing our confidence.
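
One possible way to express that ensembling step is to average each model's probability of a long outcome and map the average to a signed position. The mapping below (50% means flat, 100% means full long, 0% means full short) is an assumption chosen for illustration, and `ensemble_position` and the sample probabilities are hypothetical.

```python
def ensemble_position(model_probs, max_position):
    """Average P(long) across models, then map it to a signed position.

    0.5 means no edge (flat); 1.0 means full long; 0.0 means full short.
    """
    avg_prob = sum(model_probs) / len(model_probs)
    return (2.0 * avg_prob - 1.0) * max_position


# Hypothetical P(long) from four simple models on the same live observation.
aligned = ensemble_position([0.80, 0.75, 0.85, 0.80], max_position=100)
mixed = ensemble_position([0.80, 0.30, 0.60, 0.40], max_position=100)
print(round(aligned, 2), round(mixed, 2))
```

When the models agree, the position is large; when they disagree, their votes mostly cancel and the position shrinks toward zero.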

This approach is favorable because sizing is where most people get tripped up. If we know the direction a market is going, but we don’t size well, then we can still end up losing money. If we don’t know the direction a market is going with certainty, but we size our bets based on likely outcomes, then we have a better chance of making good money when markets move, and losing less money when markets aren’t moving. The sum is positive.

Layered approaches are favorable in trading. Neural networks are a cool class of algorithms, but they’re overconfident with few observations.

Hope you enjoyed this. Let me know if you have questions.

[1] D. Gillham, Trading the Stock Market: Why Most Traders Fail (2020), Wealth Within

[2] A. Rodriguez, Deep Learning Systems: Algorithms, Compilers, and Processors for Large-Scale Production (2020), Morgan & Claypool

[3] M. Lopez de Prado, Advances in Financial Machine Learning (2018), Wiley